AI, accessibility, and peer review: Why ‘plain language’ isn’t always plain truth

This post is based on my research available here: https://zenodo.org/records/15744438.

Peer reviewer comments are a crucial part of academic publishing. Yet for many researchers, especially those with cognitive processing disabilities, they can feel more like encrypted puzzles than constructive guidance. With the rise of large language models (LLMs) such as GPT-4, there’s growing interest in using AI to simplify this dense feedback. But how helpful is this assistance, really?

In a recent experiment, I tested GPT-4’s ability to simplify peer review comments from academic finance, a field known for its technical rigor and precision. The model rewrote each comment under two prompts: a general plain-language request, and a prompt designed with cognitive accessibility in mind (e.g., for users with dyslexia or working memory challenges). My goal was to see whether these AI-generated simplifications could genuinely support inclusion while retaining the essence of what the reviewer was trying to communicate.

On the surface, GPT-4 performed well. It shortened long sentences, smoothed out jargon, and offered gentler phrasing. But dig deeper, and a different story emerges.

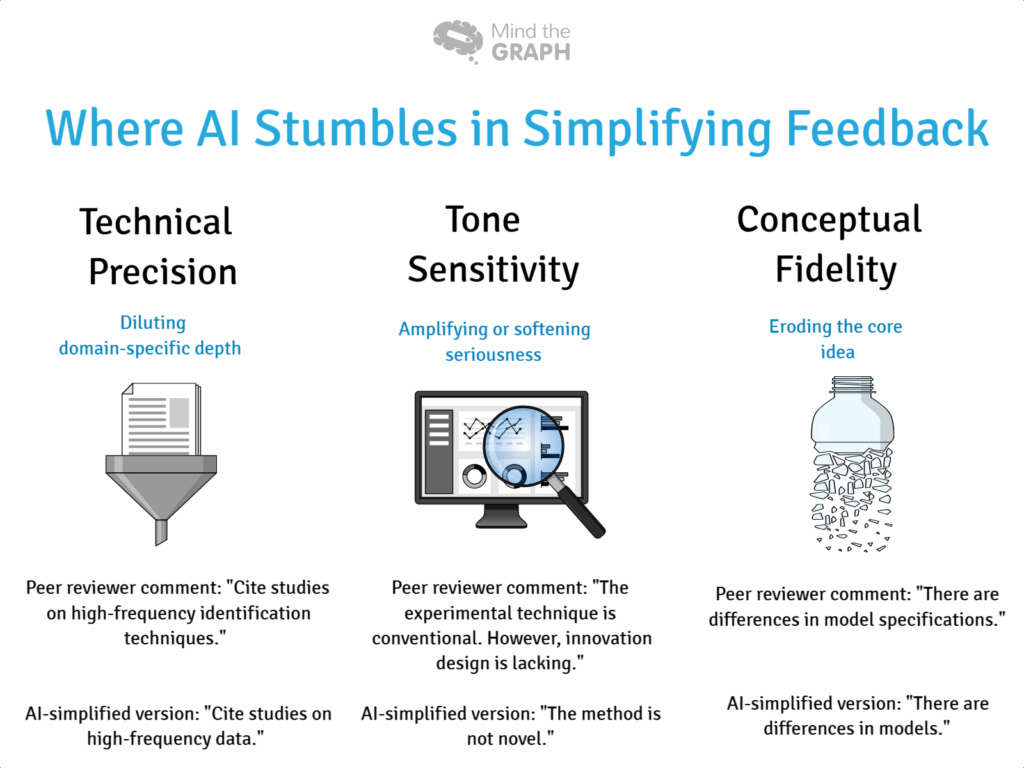

One reviewer had suggested using a difference-in-differences method to improve causal inference in a study of market reactions. GPT-4’s simplified version talked vaguely about “how quickly and how strongly the market reacts.” The method’s role in establishing causality was lost. For a researcher unfamiliar with econometric tools, this could completely distort the reviewer’s intent.
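To see what the simplification dropped, here is the textbook two-group, two-period form of the difference-in-differences estimator. The notation (treated group $T$, control group $C$, periods "pre" and "post") is chosen for illustration and is not taken from the reviewer's comment:

$$
\hat{\delta}_{\text{DiD}} = \left(\bar{Y}_{T,\text{post}} - \bar{Y}_{T,\text{pre}}\right) - \left(\bar{Y}_{C,\text{post}} - \bar{Y}_{C,\text{pre}}\right)
$$

Subtracting the control group's change removes trends shared by both groups, so $\hat{\delta}_{\text{DiD}}$ can be read as a causal effect only under the parallel-trends assumption. That identification logic is exactly what a phrase like "how quickly and how strongly the market reacts" leaves out.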

In another example, the term “endogeneity” was reduced to “hidden effects.” While this might seem like a helpful analogy, it misses the real statistical issue: correlation between an explanatory variable and the error term, which biases standard estimates and often requires complex modeling adjustments. Here, the simplification didn’t clarify; it misled.
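A minimal illustration, assuming a simple linear regression (the notation is chosen here for illustration, not drawn from the reviewed study): suppose

$$
y_i = \beta_0 + \beta_1 x_i + u_i, \qquad \operatorname{Cov}(x_i, u_i) \neq 0.
$$

Then the ordinary least squares slope does not converge to the true $\beta_1$:

$$
\operatorname{plim} \hat{\beta}_1^{\text{OLS}} = \beta_1 + \frac{\operatorname{Cov}(x_i, u_i)}{\operatorname{Var}(x_i)}.
$$

“Hidden effects” gestures at one possible cause (an omitted variable), but the statistical problem is the bias term itself: because the regressor is correlated with the error, the estimate is systematically off, which is why remedies such as instrumental variables are needed.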

Perhaps most worryingly, the model often replaced precise technical framing with casual or even incorrect alternatives. A critique about using “standard methods” was rewritten to imply that the methods themselves caused a lack of novelty, shifting the tone from nuanced to dismissive. Another simplification described “bounded rationality” as “limited thinking ability,” a harmful misrepresentation that frames a central theory in behavioral economics as a cognitive defect.

What’s striking is that even with a disability-aware prompt, GPT-4 did not consistently preserve meaning. Sometimes the tone improved, becoming more respectful and more accessible, but the technical content still suffered. The simplifications were also inconsistent across runs: the same comment, simplified twice with the same prompt, often yielded different interpretations.

For researchers navigating peer review, especially those who process information differently, this inconsistency is more than a nuisance; it’s a risk. Misinterpreting feedback can delay publication, derail revisions, or undermine confidence. And for those relying on AI as an assistive tool, trust in the tool’s fidelity is paramount.

So, what should we take away from this? First, AI can help, but it cannot yet be trusted to simplify peer review comments in technical domains without human oversight. Second, editors, peer reviewers, and support platforms like Editage must be mindful of how feedback is phrased. Plain language is a valuable goal, but clarity must be paired with conceptual accuracy. Finally, the future of inclusive publishing requires us to rethink not just how we simplify, but for whom, and with what guardrails.

As LLMs continue to evolve, we must ask tough questions about how they’re deployed in scholarly communication. Inclusion is not just about ease of reading. It’s about enabling full participation in the research dialogue. And that means making sure our tools don’t just sound simple; they must speak the truth, too.